Like humans, robots cannot see through obstacles, and sometimes they need a little help to get where they are going. Engineers at Rice University have developed a method that lets people help robots “see” their surroundings and carry out tasks. The strategy, called Bayesian Learning IN the Dark (BLIND), is a novel answer to the long-standing problem of motion planning for robots that work in environments where not everything is clearly visible all the time. The peer-reviewed study, led by computer scientists Vaibhav Unhelkar and Lydia Kavraki and co-lead authors Carlos Quintero-Peña and Constantinos Chamzas, was presented in late May at the Institute of Electrical and Electronics Engineers’ International Conference on Robotics and Automation.

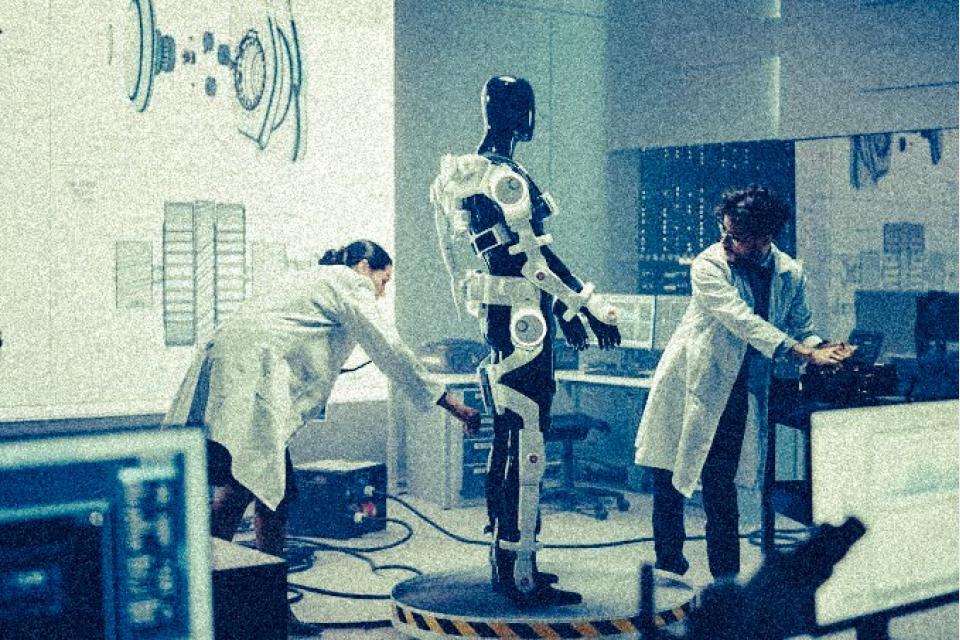

According to the study, the algorithm, developed primarily by graduate students Quintero-Peña and Chamzas working with Kavraki, keeps a human in the loop to “augment robot perception and, significantly, prevent the execution of dangerous movements.” To do so, they combined Bayesian inverse reinforcement learning, in which a system learns from continually updated information and experience, with well-established motion planning techniques to assist robots that have “high degrees of freedom,” that is, many moving parts. To test BLIND, the Rice lab directed a Fetch robot, an articulated arm with seven joints, to grab a small cylinder from one table and move it to another, a task that required the arm to move past a barrier.
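The Bayesian idea mentioned here, revising a belief each time new feedback arrives, can be illustrated with a toy calculation. The sketch below is not the BLIND algorithm and does not perform inverse reinforcement learning; the two hypotheses, the feedback model, and all of the probabilities are assumptions made up for illustration.

```python
# Toy illustration of Bayesian updating from binary human feedback.
# Hypothetical example only: the hypotheses and likelihood values are
# invented for this sketch and do not come from the BLIND study.

def update_posterior(prior, likelihoods):
    """Apply Bayes' rule: posterior is proportional to prior * likelihood."""
    unnormalized = {h: prior[h] * likelihoods[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: p / total for h, p in unnormalized.items()}

# Two candidate explanations for where an unseen obstacle sits.
prior = {"obstacle_left": 0.5, "obstacle_right": 0.5}

# Assumed feedback model: the human rejects a path segment that passes on
# the left. That rejection is likely if the obstacle is on the left (0.9)
# and unlikely otherwise (0.2).
reject_left_segment = {"obstacle_left": 0.9, "obstacle_right": 0.2}

posterior = prior
for _ in range(2):  # the human rejects a left-side segment twice
    posterior = update_posterior(posterior, reject_left_segment)

print(posterior)
```

After two consistent rejections, most of the probability mass in this toy model has shifted to the hypothesis that the obstacle is on the left, which is the sense in which repeated feedback sharpens the system's belief.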

Quintero-Peña says that the more joints a robot has, the harder it is to instruct. When directing a person, all you have to say is, “Lift your hand.” But when obstacles block the robot’s “view” of its target, its programmers must be exact about how each joint should move at every stage of the trajectory. Rather than relying on a trajectory fixed in advance, BLIND brings in a human mid-process to refine the choreographed options, or best guesses, suggested by the robot’s algorithm. “BLIND allows us to gather information in the human brain and compute our trajectories” in this high-degree-of-freedom space, Quintero-Peña said. “We use a specific feedback method we call critique, which is simply a binary kind of feedback where the person gives labels on fragments of the route,” he added.

These critiques appear as linked green dots that represent possible paths. As BLIND advances from dot to dot, the human accepts or rejects each step to fine-tune the path and avoid obstacles. According to Chamzas, the interface is easy for people to use because they can simply tell the robot, “I like this,” or “I don’t like that.” Once it has an approved sequence of movements, the robot can carry out its task, he added. One of the most important points here, according to Quintero-Peña, is that human preferences are hard to describe with a mathematical formula. “Our work simplifies interactions between people and robots by incorporating human preferences. I think that’s how applications will work.”
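The accept-or-reject loop described above can be sketched in a few lines. This is a minimal illustration, not the BLIND implementation: it swaps the study's Bayesian learning for a plain breadth-first search that drops rejected segments, and the waypoint graph, the `simulated_human` critic, and every name in it are hypothetical.

```python
from collections import deque

def shortest_path(graph, start, goal, rejected):
    """Breadth-first search that skips segments the human has rejected."""
    queue = deque([[start]])
    visited = {start}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == goal:
            return path
        for nxt in graph[node]:
            if nxt not in visited and (node, nxt) not in rejected:
                visited.add(nxt)
                queue.append(path + [nxt])
    return None  # no route left that avoids every rejected segment

def plan_with_critique(graph, start, goal, critique):
    """Replan until the human accepts every segment of the proposed path."""
    rejected = set()
    while True:
        path = shortest_path(graph, start, goal, rejected)
        if path is None:
            return None
        segments = list(zip(path, path[1:]))
        bad = [seg for seg in segments if not critique(seg)]
        if not bad:
            return path  # every segment was labeled "I like this"
        rejected.update(bad)  # remember each "I don't like that"

# Hypothetical waypoint graph: the short route passes through "a".
graph = {"start": ["a", "b"], "a": ["goal"], "b": ["c"], "c": ["goal"], "goal": []}

def simulated_human(segment):
    """Stand-in for a person who can see an obstacle near waypoint 'a'."""
    return "a" not in segment

result = plan_with_critique(graph, "start", "goal", simulated_human)
print(result)
```

In this toy run the planner first proposes the short route through `a`, the simulated human rejects both segments that touch `a`, and the second proposal through `b` and `c` is accepted.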

A novel model developed by MIT and Microsoft researchers identifies instances in which autonomous systems have “learned” from training examples that do not match what actually happens in the real world. Engineers could use this model to improve the safety of artificial intelligence (AI) systems such as robots and autonomous vehicles. For instance, the AI systems that drive autonomous cars are trained extensively in virtual simulations to prepare them for nearly every eventuality on the road. But occasionally the car makes an unexpected error in the real world because a situation arises that should change its behavior but does not.

Consider an autonomous car that lacks the sensors needed to distinguish between very different situations, such as large white cars and ambulances with red, flashing lights on the road. If the car is traveling down the highway and an ambulance turns on its sirens, the car may not know to slow down and pull over, because it cannot tell the ambulance apart from a large white car.

As with conventional methods, the researchers first trained an AI system in simulation. A person then closely monitors the system as it operates in the real world and gives feedback whenever it makes, or is about to make, a mistake. The researchers then combine the training data with the human feedback and use machine learning to build a model that identifies situations in which the system most likely needs more guidance on how to behave appropriately. The researchers validated their method using video games, with a simulated human correcting the learned path of an on-screen character. The next step is to integrate the model with conventional training and testing procedures for robots and autonomous vehicles that use human feedback.

Once the human feedback is compiled, the system essentially has a list of situations and, for each one, multiple labels saying whether its actions were acceptable or unacceptable. Because the system perceives many different situations as identical, a single situation can receive several conflicting signals. For instance, an autonomous vehicle may have repeatedly driven next to a large vehicle without slowing down or pulling over. But on just one occasion an ambulance, which looks exactly the same to the system, drives by. The autonomous vehicle does not pull over and receives feedback that it took an unacceptable action.
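The bookkeeping behind this example can be sketched as follows. This is a simplified illustration under stated assumptions, not the researchers' model: it simply computes, for each situation as the system perceives it, the fraction of feedback labels that were unacceptable. The situation names, the feedback counts, and the 0.05 threshold are all hypothetical.

```python
from collections import defaultdict

def blind_spot_scores(feedback):
    """Return, per perceived situation, the fraction of unacceptable labels."""
    counts = defaultdict(lambda: [0, 0])  # situation -> [unacceptable, total]
    for situation, acceptable in feedback:
        counts[situation][1] += 1
        if not acceptable:
            counts[situation][0] += 1
    return {s: bad / total for s, (bad, total) in counts.items()}

# Hypothetical feedback log: the car cannot tell an ambulance from a large
# white vehicle, so both are recorded under the same perceived situation.
feedback = (
    [("large_white_vehicle", True)] * 9    # ordinary large vehicles
    + [("large_white_vehicle", False)]     # the one ambulance
    + [("empty_road", True)] * 5
)

scores = blind_spot_scores(feedback)

# Assumed threshold: flag any situation with more than 5% unacceptable labels.
flagged = [s for s, score in scores.items() if score > 0.05]
print(scores, flagged)
```

A plain majority vote would mark the `large_white_vehicle` situation as safe, since nine of its ten labels are acceptable. Keeping the fraction instead preserves the rare but serious signal from the ambulance.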